Computer Beats Go Champion, Shaking the AI World

Posted by Rampant Coyote on January 28, 2016

The first game I ever played over the Internet (as opposed to a dial-up BBS, waaaay back in the day) was the game of Go. I was learning to play the game as part of a class in AI. Our big project for the semester was to create a competitive Go-playing program. So besides playing other students, I tried this Go server that used Telnet.

It was late at night, and I was playing someone from Korea. Fortunately, he spoke (typed) decent English. We chatted as we played. He knew I was a beginner – he considered himself a beginner, too, but he was FAR more experienced than me. That’s Asia for you. Anyway, he kindly warned me without giving me details that I was in trouble and leaving myself open to his attack, but I couldn’t figure out what he meant. We both saw the same board, but I couldn’t tell what I was doing wrong. I was playing an efficient game, I thought, and I’d been one of the best players in my class.

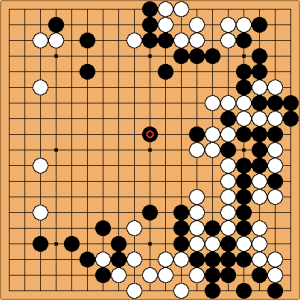

Finally, he made the attack he’d been warning me about. It was devastating. My groups weren’t as safe as I thought they were, and I found myself without any kind of decent plan or option. The game was over within a dozen or so moves after that. I thanked him for the game, he offered encouragement, and I was able to fix some weaknesses in my play. Just a few. I still have tons. I still suck at Go.

***

The problem with my game was in part a problem with developing AI to play Go. The rules of play are deceptively simple, leaving the complexity in actually playing the game. The possibility space is too large for a brute-force search of probable moves, although with my program for the class competition, I did incorporate some of that. Perhaps more importantly, the challenge is understanding what’s really happening on the board, and recognizing all the relationships between the stones.

That’s really the problem with all of AI – coming up with a way to represent and evaluate the data. Sure, the AI can see the Go Board… 19×19 spaces with 3 possible states for each spot (empty, black stone, or white stone) is no big deal. Playing the game is again no big deal – the rules are easier than those of Chess. But one of the challenges in Go is that even calculating the score can be a little tricky, even for humans. Beginners, in particular, may get into disagreements over the score and have to play things out after the game is over to be certain of the score in a close game.

That’s all stuff the AI has to understand on one level or another. The key to playing Go is to understand those relationships. There’s no single good way to do this. For our projects in my AI class, the sample concepts that we had to work with included weighting of squares (it’s easier to find safety and to control space working near the corners and edges than in the center), armies (groups of connected stones), influence, liberties, and detecting “eyes” (liberties that are unassailable by the opponent – if a group has two eyes, it’s impossible to capture). These were all relatively complex topics on their own, and prone to errors. Then trying to get the AI to work with all of these in some sensible format was another hurdle. And, finally, these were only the beginning.
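Two of those concepts, armies and liberties, are simple enough to sketch. What follows is not the code from my class project, just a minimal flood-fill illustration under my own assumptions: the board is a list of equal-length strings, with `.` for empty, `X` for black, and `O` for white, and the function names are made up for this example. Stones connect only horizontally and vertically, never diagonally.

```python
EMPTY, BLACK, WHITE = ".", "X", "O"

def neighbors(row, col, size):
    """Yield the on-board points directly above, below, left, and right."""
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < size and 0 <= c < size:
            yield r, c

def group_and_liberties(board, row, col):
    """Flood-fill from one stone; return (stones in its group, its liberties)."""
    color = board[row][col]
    if color == EMPTY:
        raise ValueError("no stone at that point")
    size = len(board)
    group, liberties = {(row, col)}, set()
    stack = [(row, col)]
    while stack:
        r, c = stack.pop()
        for nr, nc in neighbors(r, c, size):
            if board[nr][nc] == EMPTY:
                liberties.add((nr, nc))      # empty neighbor: a liberty
            elif board[nr][nc] == color and (nr, nc) not in group:
                group.add((nr, nc))          # same color: part of the army
                stack.append((nr, nc))
    return group, liberties

board = [
    "XX...",
    "OX...",
    ".O...",
    ".....",
    ".....",
]
stones, libs = group_and_liberties(board, 0, 0)
print(len(stones), "stones,", len(libs), "liberties")
```

On this small board the black army in the corner has three stones and two liberties, and the two white stones are separate groups because they only touch diagonally. Eyes and influence are much harder, which is where the real trouble started.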

I got a little obsessed over this when I was working on it. I’d walk to school obsessing over influence maps and better ways of recognizing patterns on the board. But even so, I realized that for truly high-level play, there was more to it than a fallible human could devise and explain. At some point, the machines would have to use more generalized pattern recognition and machine learning to master the game. While I always thought it was possible, it also always seemed distant. Even as modern Go-playing programs could kick my butt with ease, there was still a long way to go.

***

This week, a Google-sponsored system called AlphaGo defeated European champion Fan Hui. Not just defeated, but shut him out, 5-0. While he’s not on the level of the Asian masters, this is definitely a big deal, and not just within the limited field of one of the most popular – and most ancient – actively played games.

Chess was a different animal, solved by a very different strategy. The fundamental approach (albeit enhanced by several other methods) was a search of a problem space, plus pruning tricks to make it more efficient. Even so, it took decades to get from the point at which programs were first capable of beating ordinary humans to the point at which Deep Blue was able to truly go toe-to-toe against Garry Kasparov. Chess was easier to define in terms of problem space, which in AI is half the problem. After that, it was all optimization. That same AI approach has numerous real-world uses that have been applied for decades in various areas, particularly expert systems, but its application is limited to areas with well-defined and limited problem spaces.
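For a feel of what “search plus pruning” means, here is a minimal sketch of minimax with alpha-beta pruning, one well-known pruning trick for this kind of game-tree search. I’m using it as a representative example, not as a description of how any particular Chess program was built. The toy tree format is my own assumption: a node is either a number (a leaf score from the first player’s point of view) or a list of child nodes.

```python
import math

def alphabeta(node, maximizing=True, alpha=-math.inf, beta=math.inf, visited=None):
    """Return the minimax value of a toy game tree, pruning hopeless branches.

    `visited`, if given, collects the leaves that were actually evaluated.
    """
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node
    best = -math.inf if maximizing else math.inf
    for child in node:
        value = alphabeta(child, not maximizing, alpha, beta, visited)
        if maximizing:
            best = max(best, value)
            alpha = max(alpha, best)
        else:
            best = min(best, value)
            beta = min(beta, best)
        if alpha >= beta:
            break  # the other side already has a better option elsewhere
    return best

# Three candidate moves, each answered by one of two replies.
tree = [[3, 5], [2, 9], [0, 1]]
seen = []
print(alphabeta(tree, visited=seen), seen)
```

On this tree the search returns 3 after looking at only four of the six leaves (3, 5, 2, 0). Once the second move is known to be worth at most 2, there is no reason to look at the 9. That saving compounds with depth, which works nicely when a position can be scored cheaply and reliably. In Go, scoring a mid-game position is exactly the hard part.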

AlphaGo – and the Jeopardy-winning Watson – represent something else. These games represent much wider areas of applicability in the real world. As my AI professor explained it, Chess is a game of tactics representing a single battle. Go is a game of an entire war… and not just war, a coexistence. While you can’t just reprogram AlphaGo to solve world peace or something like that, it does represent a breakthrough in machine learning and pattern recognition that has innumerable real-world applications.

I was getting my butt whupped by Chess programs since the first one I played, so it wasn’t like I felt any loss when Deep Blue first defeated Kasparov. I suck enough at Go that I have been getting beat by Go programs for a long time. I’m not one who takes seriously the concerns of machine consciousness or the coming of SkyNet. I’m not super-worried about machines thinking for us. They are tools, just like levers and backhoes, which magnify human capability. In this case, we get tools to help our brains and not just our muscles. Like a calculator. Only this time, we may end up with a more powerful version of Siri. Somewhere down the road.

But this week – even though there are more tournaments to go – I feel like that puzzle I obsessed over back in college has finally been solved.

That seems pretty cool.

Filed Under: General - Comments: 8 Comments to Read

CdrJameson said,

I vaguely remember from my AI, or possibly my psychology, that one of the main lessons from Chess playing research was that being good at Chess didn’t particularly correlate with being good at anything else. This was a great shame because ‘being good at Chess’ was being used as a proxy for ‘being intelligent’.

Anyway, the Go result is a big win for the informal/heuristic school of AI vs the formal/logical school (which generally get Chess), but there’s always the drawback that using neural nets smacks of magical thinking, i.e. throw enough training and processing layers at it and it’ll magically come up with some way to solve the problem (in this case, provide a strategy and separately a numerical assessment of how good a board position is).

How does it win? No idea, the inputs just go into the magic box and the moves just come out the other end. In a lot of ways it’s an electronic superstition generator.

The really useful trick would be to provide an answer to the question ‘What is ‘enough’ neurons/layers?’ for any given problem, and indeed ‘Is it solvable at all?’. Solving individual problems like Go just highlights that this particular problem is solvable by this particular technique. It isn’t going to bring on the AIpocalypse.

Neural networks of course run mad-fast once they’re trained, which is a big help in this situation. They can be quite blind to things outside their training set though, which is less good. Something as simple as trying a few random opening moves might fry its brain good.

Anyway, well done them. Would just be nice if you could ask the network what its strategic insights actually were. Unfortunately, it doesn’t know.

McTeddy said,

SUCK IT CHAMPION! Now you know how I feel every time I played computer GO against a beginner AI.

That’s actually really cool though. While I’ll never reach the point that such AI affects me, I’m in awe that AI’s come this far.

CdrJameson said,

Next: Computer beats human at Go Fish.

Modran said,

Got into an argument yesterday with someone saying that it wasn’t proper intelligence, just brute force, and that playing to win wasn’t the proper way to play go.

I tried to explain that the AI made choices, made guesses of what its opponent would play, did NOT have all the possibilities (as for chess) and that the goal was to win, yes, but could be modified to look for beauty and harmony…

Sadly, I don’t think I reached him.

Maybe I should have said the goal wasn’t to win, but to reach a state where the AI could make informed guesses as accurate as possible…

Rampant Coyote said,

I’d agree that it’s not “proper intelligence,” but we really don’t understand what intelligence *REALLY* is, so it’s not something one can be objective about.

However, it’s not brute force. Chess is elegant, optimized, brute-force-with-heuristics-and-some-twists. Go is not. That was the whole point.

And yeah – in *ANY* case, the goal is maximizing the chances of a win, but it doesn’t have to be. You can maximize anything.

Mr Horse said,

Perhaps more importantly for game devs would be use of AI to design and test games. Paul Tozour wrote about this here:

http://www.gamasutra.com/view/feature/186088/postmortem_intelligence_engine_.php?page=2

CdrJameson said,

In AI it was a joke that intelligence was anything we didn’t understand how to do yet.

There would never be proper artificial intelligence because any problem we solved wouldn’t count any more.

Using Neural Nets (and simulated genetics) we get around this by solving the problem without understanding it.
